A Personal Journey: From Early Computing Dreams to AGI

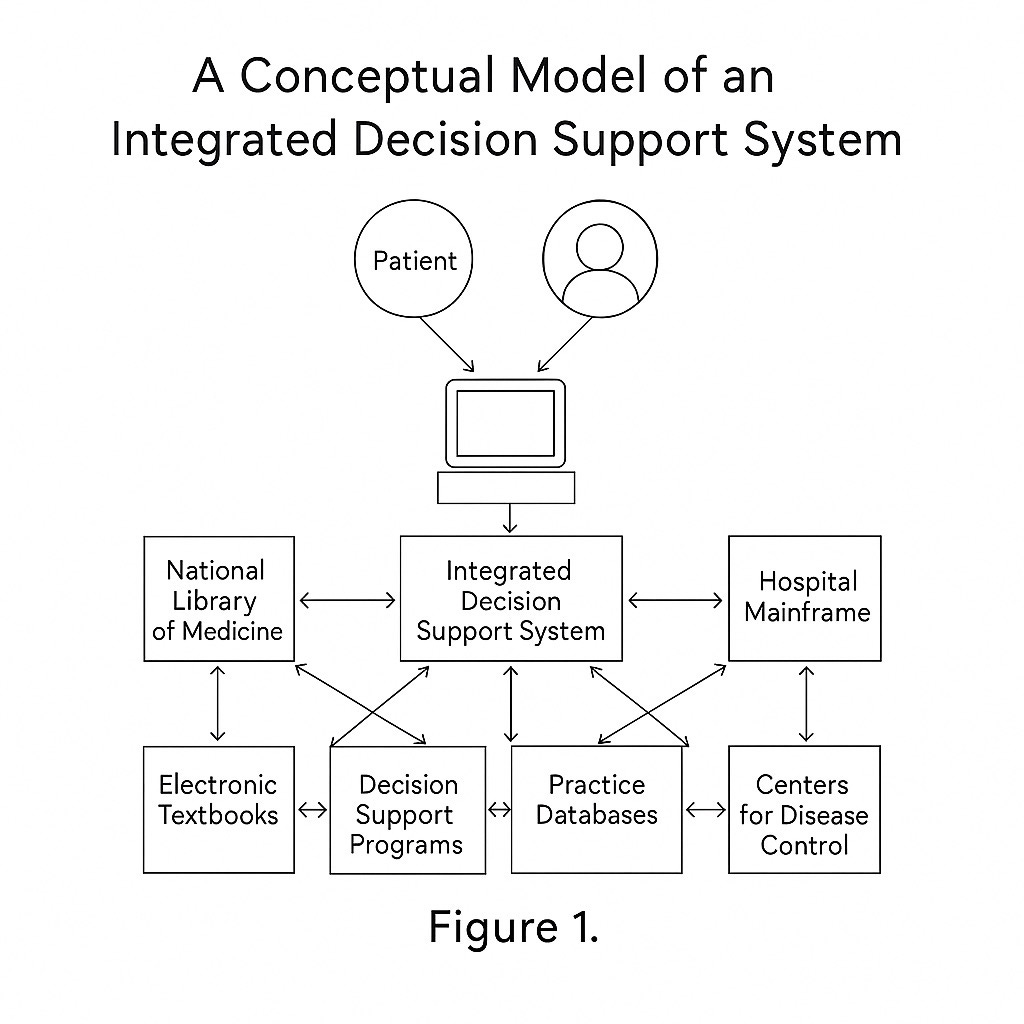

Back in 1988, when I was starting out in clinical informatics with my Leading Edge Model D 8088 computer, I had what seemed like a simple vision: Why couldn't we just "hook up" the National Library of Medicine, the CDC databases, my hospital's mainframe, and electronic textbooks like Scientific American Medicine into one interconnected system? Such a network could potentially answer any question about clinical medicine, public health, or medical research. It seemed so straightforward in concept—connect existing knowledge repositories and create something greater than the sum of its parts.

As it turned out, this wasn't nearly as simple as I had imagined. The technological barriers were immense, the data integration challenges overwhelming, and the conceptual framework for such systems barely existed. This realization sparked what would become a lifelong passion for understanding how we might create truly intelligent systems that could integrate and reason across vast domains of knowledge.

Now, decades later, we stand at a different frontier with Artificial General Intelligence. The vision has evolved far beyond my early dreams of connected medical databases, yet the fundamental questions remain: How do we create systems that can reason across domains? When might we achieve this breakthrough? And what will it mean for humanity when we do?

The New Reality of AGI Discussions

Artificial General Intelligence has transcended its traditional boundaries. No longer confined to academic papers or science fiction, conversations about AGI now permeate boardrooms, Senate hearings, and, increasingly, our everyday discussions. Many experts describe the prospect of AGI as having become "extremely visceral," something they can almost "see" on the horizon. But what exactly are we talking about when we discuss AGI? When might this technological watershed arrive? And perhaps most importantly, what implications does it hold for humanity, both for us as individuals and for society as a whole?

Understanding AGI: Beyond Simple Definitions and Dutch Elm Disease

During my graduate school days at Stanford, where I was working diligently on building a diagnostic expert system, my wife gave me a cartoon that I've kept to this day. It showed a doctor and patient huddled over a computer, with the doctor saying, "I typed your symptoms into the computer and it says you have 'Dutch Elm disease'!" That cartoon has stayed with me through decades of technological advancement—a humbling reminder of both the promise and the limitations of artificial intelligence in medicine.

This anecdote illustrates a fundamental challenge we still face with AI systems: the gap between specialized knowledge and true understanding. Our early expert systems could follow programmed rules and make impressive-looking diagnoses, but they lacked the general intelligence to recognize when they'd wandered completely outside the realm of human ailments and into arboreal diseases!

When we talk about AGI today, we're discussing something far beyond those early rule-based systems. AGI isn't defined by rigid parameters, and the concept has deep historical roots dating back to the very birth of artificial intelligence in 1956. Initially, researchers conceived AGI as artificial intelligence capable of matching or exceeding human cognitive abilities across diverse tasks. The term later gained momentum as what senior researchers called a "refreshing call for ambitious projects" that moved beyond narrow AI applications—like my early diagnostic system that couldn't distinguish between human and tree diseases.

Different perspectives exist about what constitutes AGI's arrival. Some define it as AI that dramatically exceeds human performance in a limited set of tasks. Others speak of "Exceptional AGI" as systems matching the capabilities of the 99th percentile of skilled adults across a wide spectrum of non-physical and metacognitive tasks. The most serious concerns about AGI risks often center on systems achieving this higher level of capability.

At its core, AGI represents the creation of machines that can think, reason, and plan with general capabilities rather than specialized functions. Many experts suggest the "G" in AGI implies systems that are far less specialized and demonstrate more "coherent abilities" across domains—more akin to human general intelligence than today's task-specific AI systems. In other words, AGI wouldn't just tell you that you have Dutch Elm disease; it would understand why that diagnosis is absurd for a human patient.

Both AGI and its potential successor, "superintelligence," represent technologies that could fundamentally transform society in ways comparable to the Industrial Revolution. This transformation carries dual possibilities: revolutionary improvements in healthcare, education, and living standards, alongside significant risks including severe harm and potentially existential threats to humanity.

The Timeline Question: When Might AGI Arrive?

Predicting AGI's arrival involves substantial uncertainty. However, experts consider a "broad range of timelines," including notably short ones, to be plausible. When researchers discuss "short timelines," they typically mean Transformative AI (TAI) or Exceptional AGI arriving within the next decade, often before 2035 or specifically by 2030. While these shorter timelines aren't yet mainstream views, they're "gaining real traction" among experts and forecasters.

Consider these perspectives from the research:

Some researchers believe AGI by 2027 is "strikingly plausible." This view often follows trendlines in computational scaling, algorithmic efficiency improvements, and "unhobbling" gains that suggest another major qualitative leap in AI capabilities may be imminent.

Analysis of recent performance data, particularly findings from the "bombshell" METR paper, shows that the capability of generalist autonomous agents has been doubling approximately every 7 months for the last 6 years. Capability here is measured by the length of the tasks, in terms of how long they take human professionals, that these systems can complete with 50% reliability. If we extrapolate this exponential trend, with appropriate caution about its certainty, AI could become capable of completing tasks that currently take humans over a month sometime between late 2028 and early 2031. Even if the absolute measurements contain errors, the trend points to agents handling week-long or month-long tasks within a decade.

One forecast model based on compute scaling puts AGI's arrival at around February 28, 2030. Another probability model assigns a low chance of AGI arriving this year (0.1%), rising to about 2% by the end of 2026 and about 7% by the end of 2027.

While recent median expert predictions for Transformative AI often fall within the next 10-40 years, the trend has been consistently shifting toward shorter timelines.
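To make the METR extrapolation described above concrete, here is a minimal Python sketch of the arithmetic. It assumes a clean exponential with no slowdown. The one-hour starting horizon is an illustrative assumption, not a figure from the METR paper. So is the definition of a working month as about 173 hours (40 hours a week, 52 weeks a year, divided by 12). The function name is hypothetical.

```python
import math

def months_to_reach_horizon(start_hours: float, target_hours: float,
                            doubling_months: float = 7.0) -> float:
    """Months until a task horizon grows from start_hours to target_hours,
    assuming it doubles every `doubling_months` months with no slowdown."""
    if start_hours <= 0 or target_hours <= 0 or doubling_months <= 0:
        raise ValueError("all inputs must be positive")
    # Number of doublings needed, times the length of each doubling.
    doublings_needed = math.log2(target_hours / start_hours)
    return doublings_needed * doubling_months

# Illustrative assumptions (not from the METR paper):
START_HORIZON_HOURS = 1.0          # hypothetical current 50%-reliability horizon
WORK_MONTH_HOURS = 40 * 52 / 12    # about 173 working hours in one month

for doubling in (5.0, 7.0, 9.0):   # vary the doubling time to see the sensitivity
    months = months_to_reach_horizon(START_HORIZON_HOURS, WORK_MONTH_HOURS, doubling)
    print(f"doubling every {doubling:.0f} months -> month-long tasks in ~{months / 12:.1f} years")
```

With a 7-month doubling time, a one-hour horizon reaches a working month after roughly 7.4 doublings, or about 52 months. That is a little over four years from whenever the starting horizon holds. The 5- and 9-month variants show how sensitive any such date is to the assumed doubling rate.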

What Could Drive Such Rapid Progress? Lessons from My Journey

When I reflect on my own journey from that 8088 computer to today's AI landscape, I'm struck by the exponential nature of technological change. The computer that powered my early clinical informatics work had less processing capability than today's smartwatches. The leaps we've seen since then inform my thinking about what might drive AGI's development in the coming years.

Several key factors could accelerate AGI development far beyond what I imagined possible in those early days:

Computational Scaling: During my career I've watched memory and storage grow from kilobytes to petabytes, with processing power climbing by many orders of magnitude alongside them. Much of today's progress comes from applying ever more processing power to train AI models, which has been a primary driver of recent advances. This approach has been bolstered by trends like Moore's Law and by massive investment, with discussions escalating from $10 billion to $100 billion to even trillion-dollar compute clusters. This scaling provides much of the plausibility behind shorter timeline predictions. National security concerns could accelerate it further, leading to unprecedented industrial mobilization, something I've seen happen repeatedly when a technology becomes strategically important.

Recursive Improvement: Sometimes called Direct Recursive Improvement (DRI), this refers to AI systems contributing to AI research and development themselves, potentially creating a powerful feedback loop. Back when I was building expert systems by hand, the idea that a system could improve its own code seemed far-fetched. Now, if AGI can automate significant portions of AI research, it could compress a decade of algorithmic progress into a year or less, accelerating development far beyond anything we've seen before.

Alternative Pathways: During my career, I've seen numerous approaches fall in and out of favor—from rule-based systems to neural networks to statistical methods, and now back to neural networks in more sophisticated forms. Other approaches like hybrid or networked AI systems could also potentially reach high capabilities. The emergence of "Agentic AI"—systems that can make plans, perform multi-step tasks, and interact with their environment with minimal human oversight—represents what some see as a significant step beyond current large language models. These developments remind me of how our early dreams of medical expert systems are finally coming to fruition, though in forms we couldn't have anticipated.
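The recursive-improvement feedback loop described above can be illustrated with a toy model. It is a hypothetical sketch, not a forecast. Assume baseline research yields one "year" of algorithmic progress per calendar year, and that each unit of accumulated progress speeds research up by a fixed fraction. That fraction is the `feedback` parameter, an invented knob; the function name is also hypothetical. Stepping the model forward in small time increments shows how the time needed to accumulate a decade of progress shrinks as feedback grows.

```python
import math

def years_to_accumulate(target_progress: float, feedback: float,
                        dt: float = 0.001, max_years: float = 100.0) -> float:
    """Calendar years needed to accumulate `target_progress` units of progress
    when the research rate is 1 + feedback * progress (toy model, Euler steps)."""
    progress = 0.0
    steps = 0
    while progress < target_progress:
        rate = 1.0 + feedback * progress   # more progress -> faster research
        progress += rate * dt
        steps += 1
        if steps * dt > max_years:
            return math.inf                # target not reached within max_years
    return steps * dt

# Hypothetical feedback strengths; 0.0 means no recursive improvement at all.
for feedback in (0.0, 0.5, 1.0, 5.0):
    years = years_to_accumulate(10.0, feedback)
    print(f"feedback={feedback:.1f}: a decade of progress in ~{years:.2f} years")
```

For this toy equation the exact answer is ln(1 + 10 * feedback) / feedback years. Feedback of 1.0 gives about 2.4 years, and feedback of 5.0 gives about 0.8 years. The point is qualitative: a self-reinforcing research rate could compress a decade into a year or less, but the parameter values say nothing about real-world AI research.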

The Hype Factor: Maintaining Perspective

We should acknowledge the potential for "hype" in AI predictions. By the measure of Gartner's Hype Cycle framework, Generative AI was estimated in November 2024 to have just passed its peak of inflated expectations. Historically, over-hyped projections have contributed to previous "AI winters" in which progress and funding stalled. Predictions about technologies like fully deployed self-driving trucks have often proven "wildly optimistic" in the past. Therefore, while short timelines deserve serious consideration, maintaining intellectual humility remains essential.

Nevertheless, the sentiment from many researchers is clear: we are in the "midgame now," and exponential growth is "in full swing." A substantial contingent believes we are on a path to AGI by 2027, potentially advancing toward "superintelligence, in the true sense of the word" by decade's end. The potential for exponential growth to trigger "explosive progress" in the near future carries profound implications for AI safety planning.

Preparing Ourselves and Society: Avoiding Dutch Elm Diagnoses at Global Scale

Given the potential for rapid and profound change, how can individuals and society prepare? Having worked at the intersection of technology and healthcare for decades, I've seen how unprepared systems can lead to consequential mistakes—from my cartoon's Dutch Elm disease misdiagnosis to real-world medication errors. The stakes with AGI will be incomparably higher, and my experiences inform how I view our collective preparation needs.

For Society and Governments: Lessons from Healthcare Systems

Prioritize Safety and Governance: In healthcare informatics, we learned—sometimes the hard way—that safety must be built in from the beginning, not added as an afterthought. As AI systems become increasingly capable, safety research, proactive governance frameworks, and robust impact monitoring grow correspondingly important. This process should involve early engagement with policymakers and civil society leaders to develop appropriate oversight mechanisms. I've seen how medical software that lacked proper governance created serious patient safety risks; with AGI, such risks could scale to affect millions.

Develop Comprehensive Risk Management: Throughout my career implementing clinical systems, I've observed that the most successful deployments included structured risk management from day one. There's growing recognition of the need for similar approaches to managing AI risks, especially for general-purpose AI and foundation models. Stakeholder engagement from the earliest development stages becomes crucial for identifying potential impacts across diverse sectors—something we learned was essential when introducing new technologies into healthcare environments.

Address Specific Risk Categories: My work in medical informatics taught me that successful risk mitigation requires categorizing and addressing distinct types of risks. For AGI, proactive planning must address several risk dimensions:

Misuse prevention: Creating robust systems to block malicious actors from weaponizing advanced AI capabilities—similar to how we develop safeguards for dangerous pharmaceuticals

Misalignment mitigation: Ensuring we fully understand AI actions and can create hardened environments that prevent harmful outcomes—much like how we've had to design medical software with guardrails against dangerous orders

Existential threat reduction: Addressing the most severe potential risks to humanity's long-term future

Ethical safeguards: Preventing capabilities that enable mass surveillance, propaganda, or other social harms—concerns that echo privacy protections we've built into health information systems

Economic disruption management: Developing policies to address potential job displacement and economic transformation

Gradual disempowerment prevention: Addressing the risk that incremental AI progress could erode human influence over key societal systems—a process I've witnessed in miniature as automated systems sometimes quietly shifted decision-making away from clinicians

Foster International Cooperation: My involvement with international medical informatics standards taught me how challenging, yet essential, global coordination can be. Multiple sources emphasize that humanity remains unprepared for the scale of potential global AI challenges and lacks adequate mechanisms for effective international cooperation. There is an urgent need for international agreements and proactive measures, especially given the potential for vastly superhuman capabilities to emerge relatively soon.

Address National Security Implications: Having consulted on healthcare technology for government agencies, I've seen how quickly national security concerns can reshape technological priorities. The race toward AGI is increasingly framed as a national security issue. Some analysts foresee government involvement accelerating, potentially launching large-scale projects to develop superintelligence as critical national defense priorities. Preventing advanced AI proliferation to adversarial states represents a significant security concern.

Manage Expectations and Communication: Throughout my career, I've seen how both excessive hype and unwarranted dismissal of new technologies can be harmful. AI professionals must question hype while helping the broader public understand the distinction between realistic projections and inflated claims. Learning from past "AI winters" caused by unrealistic expectations remains essential. The public already shows growing awareness and concern about AI's impact, particularly regarding employment.

For Individuals: Personal Adaptations from My Professional Journey

In my decades of work bridging clinical medicine and computer science, I've had to continually reinvent my skill set as technologies evolved. My journey from that 8088 computer to today's AI landscape offers some personal insights on how individuals might prepare for the AGI transition:

Develop Information Literacy: When I began in clinical informatics, simply accessing relevant information was a major challenge. Today, the challenge has inverted—we're drowning in information. Staying informed about AGI developments and debates provides crucial context for navigating this rapidly evolving landscape. I've found that developing systematic ways to filter and evaluate information has been essential throughout my career.

Build AI Literacy: When I showed colleagues that "Dutch Elm disease" cartoon years ago, most physicians had no concept of how expert systems worked or why they might make absurd errors. Today, it's becoming increasingly important for everyone to interact confidently, critically, and safely with AI systems. This means understanding what AI can and cannot do, how it functions at a conceptual level, its fundamental challenges and ethical considerations, and maintaining focus on human agency and control. AI literacy is increasingly recognized as a "key competency for work, everyday life, and lifelong learning"—something I've seen become progressively more important in healthcare settings.

Cultivate Complementary Skills: Throughout my career, I've observed that the most resilient professionals weren't those who specialized narrowly in soon-to-be-automated tasks, but those who developed abilities that complemented emerging technologies. Today that means focusing on areas that complement rather than compete with AI capabilities, such as creativity, emotional intelligence, ethical reasoning, and interpersonal leadership. In my field, the physicians who thrived with computerization were those who used technology to enhance their human connections with patients, not replace them.

Prepare for Labor Market Evolution: When I began working with electronic health records, many predicted the immediate elimination of medical transcriptionists. The reality proved much more complex and gradual. While predictions about the pace of job displacement have often proven premature, AI has already begun transforming employment patterns, eliminating some roles while creating others. Familiarity with AI tools and how to manage them effectively is becoming an increasingly valuable professional skill. My own career trajectory from physician to informatics specialist to AI researcher demonstrates how adapting to technological change can create unexpected opportunities.

Engage with Policy Development: Some of my most consequential work has involved shaping policies for health information technology. Advocate for regulations designed to ensure AGI benefits humanity broadly rather than narrowly serving particular interests. The voices of thoughtful individuals with both technical understanding and domain expertise will be crucial in developing wise governance frameworks.

Understand Personal Risk Exposure: Working in healthcare taught me how privacy risks evolved with technology. With each new system, we had to develop new approaches to protecting sensitive information. Similarly, individuals should develop awareness of potential personal risks including privacy concerns, misinformation vulnerability, and new forms of online harassment enabled by AI tools. Remember that generative AI models sometimes leak training data or "hallucinate" inaccurate information (much like that Dutch Elm disease diagnosis!), making critical thinking skills increasingly valuable.

The Path Forward: From Medical Informatics to AGI

When I look back at that eager young physician with his 8088 computer, dreaming of interconnected medical knowledge systems, I realize how far we've come, and how much further we still have to go. No one can say with certainty when AGI will arrive, but the possibility of its arrival within this decade merits serious consideration. The technological drivers for rapid progress exist, and the potential impacts, both beneficial and harmful, are immense.

In many ways, my journey through clinical informatics mirrors our collective journey toward AGI. We started with grand visions that proved more challenging than anticipated. We encountered failures and setbacks—like those Dutch Elm disease diagnoses—that taught us humility. We witnessed both over-hyped promises and surprising breakthroughs. And through it all, we've had to continually balance technological capability with human values and needs.

We stand at a critical juncture where decisions made today will substantially shape AI's future trajectory. This isn't merely another technology cycle or investment opportunity—it represents something potentially far more consequential for humanity's future than anything I've witnessed in my decades-long career. Staying informed, advocating for responsible development practices, and preparing ourselves with necessary skills and understanding represent crucial steps as we navigate the exciting, uncertain, and potentially world-altering path toward Artificial General Intelligence.

The cartoon of the computer diagnosing Dutch Elm disease still hangs in my office—a reminder that as we build ever more powerful systems, we must never lose sight of their limitations or our responsibility to guide their development wisely. My personal journey from that first clinical expert system to today's AI landscape has taught me that technological progress is inevitable, but its direction and impact remain very much in human hands.
